The Vertical Stage:

The Transformation of the Scene in New Live Audiovisual Performances

Arnau Horta

1. The computer as an instrument

Since their democratization in the mid-nineties, laptops have become a very common musical instrument, often used in live performance. Thanks to powerful new processors and the versatility of a wide range of software applications, it now seems possible to create and reconfigure virtually any kind of sound. Moreover, because computers are portable, this new, virtually infinite palette of sounds can be reproduced easily and instantly almost anywhere. All of these developments have contributed decisively to the advent of a new paradigm of live sound creation and have thrown traditional musical concepts into crisis, giving rise to an unprecedented and revolutionary figure: the computer soloist.

However, the sound aesthetics associated with this new type of meta-instrument did not appear spontaneously. Much of the music created with digital tools incorporates some of the major advances (and some of the revolutionary breaks) made by several of the twentieth century's avant-garde musical movements. Before addressing other issues, it is therefore necessary to take a brief tour through some key events in the history of the last century's experimental music. We do not intend to be exhaustive; we will focus on two episodes that seem especially important in the context of this study: Futurist music and “Musique Concrète”.

2. A noisy century

Researching alternatives (mainly graphical) to traditional musical notation and seeking new sounds through new procedures and devices were two of the most common concerns among the last century's experimental musicians and composers. John Cage, one of the central figures of twentieth-century music, art and thought, played a decisive role in both respects.

Cage defended a new way of understanding musical creation, based on the possibility of using any type of sound (musical or not, intentionally or randomly produced) and on various compositional and interpretive procedures involving new systems of notation. In his text “The Future of Music: Credo” (1937), Cage stated that the old idea of “music” should be replaced by a broader concept: a new notion, free of any restriction. Cage proposed organizing sounds by means of new instruments and systems, different from those that had been used until then. “Whereas in the past the point of disagreement was between consonance and dissonance, in the immediate future it will be between noise and so-called musical sounds”, wrote Cage.

More than two decades earlier, in his manifesto-letter “The Art of Noises” (1913), Luigi Russolo had proclaimed the need to break out of the “narrow circle of pure sounds” and conquer “the infinite variety of noise-sounds”. “We cannot look at the enormous apparatus of forces that the modern orchestra represents without feeling the deepest disappointment at its paltry acoustic results”, said Russolo.

This fascination with new sounds, especially those produced by industrial mechanisms and war machinery, led the Futurist musicians to design and build a series of noise-generating devices, which they called “intonarumori”. Driven by cranks, levers or even electric motors, these devices produced a wide range of new sounds and were presented as a revolutionary reinvention of the orchestra.

The performance and sound characteristics of these devices required a new form of musical writing, different from traditional notation. Rethinking the structure of the score, Russolo invented an “enharmonic” graphic notation which, unlike conventional notation (based on the division of the octave into semitones), allowed him to write any pitch by drawing rising and falling lines. This linear notation suited the “dynamic continuity” of Futurist compositions and differed from the “fragmentary dynamism” of the traditional system, based on discrete, static notes.

Another key event in the history of the last century's music took place in France in the late forties. Taking advantage of the technological advances in sound recording achieved during the Second World War, Pierre Schaeffer launched one of the most revolutionary movements in the history of music: Musique Concrète.

If, some thirty-five years earlier, the Futurists had had to adapt the score to the new graphic notation required by their intonarumori, Musique Concrète composers were free to abandon the score altogether. Theirs was a technologically mediated music, a form of sound composition based on recording the specific (“concrète”) physical characteristics of sound on magnetic tape. Years later, Michel Chion would refer to this type of sound creation as “an art of fixed sounds”.

3. Sound creation in the age of digital reproduction

Today, a new generation of musicians has taken up many of the experimental procedures of these two avant-garde movements and adapted them to the current cultural and technological context. Just as the Futurists created their music under the spell of the energetic sounds of industry and warfare, musicians who use the computer as an instrument today are often imbued with the pervasive, intangible flow of electronic information that surrounds us. The Futurist celebration of industrial machinery has given way to a new sound aesthetic based on present-day electronic technologies.

Once digitally encoded and stored, sounds can be transformed and reorganized through multiple techniques that would not be possible with any other technological medium. A good example is the “glitch”, the sound produced by an accidental error in reading a digital file, which has become a recurring creative resource in computer music. Another common procedure is “time stretching”, a process that changes the speed or duration of an audio signal without affecting its pitch. “Pitch shifting” is the reverse: changing the pitch without affecting the speed. These and many other methods are part of a new form of musical composition deeply shaped by the use of digital instruments.

At this point, we are reminded of Marshall McLuhan's famous premise that technology (in this case, digital technology) becomes an extension of the human being (in this case, the artist) that actively shapes the outcome of a particular communication process (in this case, sound creation). “From a practical and operational point of view”, wrote McLuhan in “Understanding Media: The Extensions of Man” (1964), “the medium is the message. This simply means that the individual and social consequences of any medium, that is, of any of our extensions, result from the new scale introduced into our affairs by each new extension or technology”.

Thirty years before McLuhan, Walter Benjamin had already described the decisive influence of processes of technical reproduction on both the creation and the reception of the artwork. In his essay “The Work of Art in the Age of Mechanical Reproduction” (1935), Benjamin writes: “Over long historical periods, the mode of human sensory perception changes along with the entire mode of existence of human collectives. The way in which human perception is organized (the medium in which it takes place) is conditioned not only naturally but also historically”.

Indeed, music began to undergo a historical transformation when recording techniques made possible the “crystallization” of sound in a physical medium and its subsequent reproduction. Since then, music as a score (that is, as a set of abstract information that becomes audible only through a performer's interpretation) has coexisted, and competed, with its new concrete (“concrète”) and reproducible form. In this respect, the first definition of Musique Concrète, proposed by Pierre Schaeffer in 1948 in the pages of the magazine Polyphonie, is very illustrative: “We use the word abstract for music that is first conceived by the mind, then written down in theory and, finally, realized in an instrumental performance. We call our music ‘concrète’ instead, because it is made from pre-existing elements, whether sounds, fragments of music or mere noises, which are then arranged through direct construction”. Years later, Michel Chion would summarize the consequences of the birth of Musique Concrète in these words: “an art of the text became a ‘concrète’ art”.

Emancipated from the score by being fixed in a physical medium, Musique Concrète incorporated a procedure that until then had existed only in film: editing. Editing is also the prevailing organizational principle in computer music. The software used for digital music creation relies largely on graphic representations of sounds, displayed on the screen of a computer, interface or other device. Through these visual representations, electronic musicians can organize sounds and modify them in various ways, much as a film editor works with footage.

This new visual (and thus virtual) ontology of sound and the inclusion of editing in digital music composition and performance lead us back to Walter Benjamin and his thoughts on film. “In film”, Benjamin writes, “we find, for the first time, a form of art that is completely determined by its reproducibility. With film, a crucial new quality of the artwork has emerged (...): its capacity for improvement. A film is anything but a creation finished in a single stroke; it is assembled from many images, among which the editor can choose (images that, in fact, have already been chosen and adjusted at will by the cameraman)”. “Finally”, Benjamin asserts, “in the age of the artwork produced through editing, the decline of sculpture is inevitable”.

The similarities between the role of the film editor described by Benjamin and that of musicians who use tape or the computer as a tool are quite obvious. Just as the editor constructs the film by arranging images previously recorded by the camera, these musicians use a wide palette of synthesized or sampled sound fragments as raw material. Like a film, their music is deeply determined by the reproducible nature of each element involved and can also be improved during the production process. And, again as in film editing, “mediatization” through different devices allows the work to be reviewed and corrected in real time.

However, unlike film editing, which takes place as a separate stage prior to screening, live music is created in real time. Improvisation, another key process in twentieth-century music, plays a very important role in technologically mediated music, which is often based on this possibility of intervening and adjusting parameters at the very moment of production. Such “openness” adds a whole new dimension to the musical creation process, offering infinite possibilities for improving the work, just as Benjamin described in his analysis of film editing.

Musician and theorist Roger Dean calls the possibilities that computers offer to improvisation “hyperimprovisation”. “The use of computers”, writes Dean in “Hyperimprovisation: Computer-Interactive Sound Improvisation” (2003), “allows musicians to create and edit large sound structures polyphonically and in real time. Computers also allow the construction of hyperinstruments with many levels of explicit control over the generation and processing of sounds. Furthermore, networked improvisation allows mutual (or competitive) adaptation of the interfaces and mechanisms used by various performers, even in real time. All these developments and the possibilities they offer constitute what I call hyperimprovisation”.

4. The transformation of live performance

The consequences of this instrumentalization of the computer go beyond sound creation. In many cases, the live presentation of this new kind of music, created with digital tools, does not fit traditional stage models [1]. Where there used to be a performer or a band of some size, we now find a single musician sitting in front of a computer: a sort of cybernetic one-man band, playing and manipulating any kind of sound and mixing it with other sounds, which are also transformed.

We are therefore confronted with a new hybrid creative role, between that of the composer, the performer and the computer programmer: the new type of sound artist we have referred to as the “computer soloist”. Processed and reorganized, the sounds generated by this “total musician” do not correspond to any given, visually recognizable source. Together, these unidentified sounds form a single stream that flows through the amplification system. This music is, then, “acousmatic” [2].

Michel Chion refers to this invisibility of the original sound sources as “visual silence”. This invisibility, a key element in the conceptual and aesthetic principles of Musique Concrète, is based on acousmatic listening and, very often, on the spatialization of sound through a speaker system that surrounds the audience. This music, sometimes described as “cinema for the ear”, presents sound as an autonomous and decentralized event, unrelated to the stage or any other visual element. This is why Musique Concrète performers are very often located at the back of the hall or auditorium. The audience thus does not direct its gaze towards a single point, as in a conventional concert, where the stage is the focal space in which the action takes place. Musique Concrète, as Chion points out, does not need the stage as a spatial reference; it only requires “active and scrutinizing listening” from its audience.

For their part, the Futurist musicians staged their noise compositions, created from the mechanical sounds of the intonarumori, as a confrontation with traditional musical rules, including the scenographic design of the stage. Ultimately, they sought to abolish an old musical regime, based on the logic of composition and the particular characteristics of traditional instruments, and to replace it with a brand-new, revolutionary orchestra characterized by its “voluptuous acoustics”.

A few years after publishing “The Art of Noises” (1913), Luigi Russolo wrote a new text on the “controversies, battles and first performances of the intonarumori”. His descriptions of those first concerts highlight the revolutionary will of the Futurist musicians, very close to the radical, transgressive and subversive attitude we now recognize in punk music and its later offshoots (in fact, it is no coincidence that many of these offshoots have emerged precisely in the field of electronic and digital music). The following excerpt from Russolo's chronicle describes the turbulent first public intonarumori performance, which took place on April 21, 1914 at the Dal Verme Theater in Milan:

“That evening at the Dal Verme, the teachers of the Royal Conservatory of Milan began to shout violently and insolently from their seats. They were met, however, by the formidable fists of my Futurist friends Marinetti, Boccioni, Armando Mazza and Piatti. While I was still conducting the last part of ‘Meeting of Cars and Airplanes’, they jumped into the audience and started a terrible fight that ended outside the theater. Eleven people needed medical care, but the Futurists, all unhurt and triumphant, simply marched off peacefully for a drink at Cafe Savini”.

The ultimate aim of the Futurist orchestra was to celebrate and reproduce the voluptuous sound of industry and war. Given the musical canon of the time, the shock among the audience described in Russolo's chronicle is hardly surprising. The very idea of setting aside traditional instruments and replacing them with an orchestra of mechanical devices could be seen, in itself, as a serious threat to established musical logic.

Even if they lack the incendiary zeal of the Futurists, today's digital sound creators also break with traditional musical rules and with the theatrical models of the last century. In this sense, computer music is also a new, post-industrial celebration of the machine. Musicians who use the computer as a tool are no longer inspired by the sounds of industrial plants, combustion engines and war machinery, but rather by the intangible flows of digital information that drive and shape many aspects of human activity today.

We can affirm, then, that the affirmation of a particular stage of technological progress is central to the aesthetic principles of Futurist machine music, of Musique Concrète and of today's digital music. Beyond their strictly acoustic similarities, the conceptual links between the compositions of Russolo, Schaeffer and today's digital musicians are quite obvious. In all three cases there is a certain “sonification” of technology, together with a move beyond standard musical instruments and traditional stage models.

5. Scenographic horror vacui: the "de-auratized" stage and the image as a response

If the setting is not optimal (that is, if it does not meet the conditions required for proper acousmatic listening), the empty space of the stage, occupied only by a solitary computer, can produce a strong feeling of horror vacui. Projections and other visual elements are very often used to counteract this feeling of emptiness. It is no coincidence that video jockeys have become so common in recent years. Technological advances in moving-image generation and live video editing, very similar to those described for sound creation, have led to the commercialization of extremely powerful tools and software that have completely revolutionized the way moving images can be treated in real time.

Nevertheless, collaborations between a musician and a video jockey can at times be problematic. In many cases, the dialogue between image and sound ends up as a simplistic, banal addition of two unrelated elements. Needless to say, there are exceptions, but these are almost always the result of long-established collaborations between a musician and a visual artist and, thus, do not arise spontaneously. Unfortunately, the dialogue between live music and the ubiquitous projections of video jockeys often becomes mere anecdote, a simple sum of disjointed elements whose only purpose is to mask the horror vacui we have just described.

In response to this simple audiovisual “addition” of music and visual elements, a growing group of specialized artists is taking advantage of new digital tools to work with sound and image simultaneously, treating the two from a non-hierarchical perspective. These audiovisual works, whether by a single artist or the result of collaboration, are presented live but may in fact belong to any other creative field: live cinema, photography, media art, installation or disciplines that are harder to categorize.

6. Discotheque inheritance

That said, it is important to note that, apart from these similarities, electronic dance music and experimental digital music must be considered two completely different styles that establish very different relationships between space, the audience and the listening experience. Unlike at a concert of experimental music, music in a club is not meant for careful listening but for dancing. The visual elements found in a club are not designed to fill an empty stage, but to create a unified, integrated, decentralized and communal space in which dancing takes place as a form of social interaction.

7. The vertical stage and the mediatized experience

The increasing presence of screens and other image displays is not exclusive to computer music concerts. Screens proliferate at stadium rock shows, theater and dance performances and almost every mainstream sporting event. The screen has become a ubiquitous element, inseparable from the live experience. Whatever discipline or field they belong to, live events today are deeply imbued with the current supremacy of the image.

Philip Auslander analyzes this phenomenon in his book “Liveness: Performance in a Mediatized Culture” (1999). “Today”, Auslander writes, “live performance incorporates mediatization to the extent that the final experience becomes a product of technological means. One might think this has been the case for a long time if, for example, we consider that the electrical amplification of an instrument is also a form of mediatization. And indeed, what we hear in this case is the vibration of a loudspeaker, a reproduction of sound captured by a microphone, not the original acoustic sound. Recently, however, mediatization has intensified in a wide range of genres and cultural contexts in which the action takes place in real time. (...) The audience is present at a live show but hardly participates in it as such, because its main experience is focused on a video projection (...) Many aspects of our current relationship with live performance suggest that mediatization has had a very significant effect on shaping the sensibility of our historical moment (...) Or, put differently: live performance has absorbed an epistemology derived from technological media”.

In Auslander's words we hear an echo of Walter Benjamin's ideas on how technical reproduction shortens the distance between the receiver and the artwork, at the cost of its “aura”. “Bringing things closer”, Benjamin writes, “is a demand of the contemporary masses. Every day the need becomes clearer to take hold of the object at the closest range, but in an image or, more often, in a copy, a reproduction. And there is no doubt about the difference between the reproduction and the real image (...) Uniqueness and permanence are as closely connected in the latter as are transitoriness and reproducibility in the former. The adjustment of reality to the masses and of the masses to reality is a process of unlimited scope, as much for thinking as for perception”.

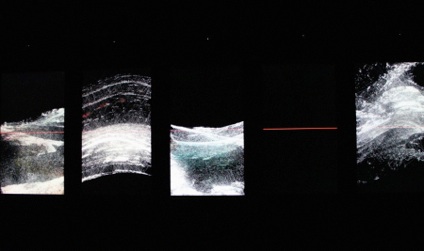

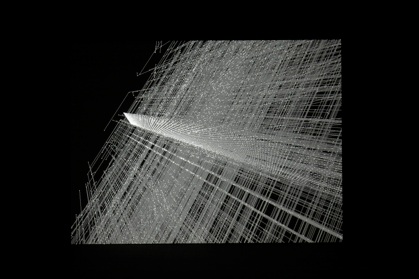

As Auslander points out, there is a gradual depreciation of human presence, which apparently can only be compensated for by designing live performances that resemble the mediatized perceptual experience as closely as possible. This happens, for example, in the audiovisual concerts of both Ryoji Ikeda and Ryoichi Kurokawa, in which the stage as such disappears completely and is replaced by a screen (or several, in Kurokawa's shows). Like Musique Concrète performers, Ikeda and Kurokawa stand at the back of the hall or auditorium, and the audience does not see them unless they greet the audience at the end of the concert (which, incidentally, is rare). Attending Ikeda's and Kurokawa's shows is thus more like watching a film in a cinema than going to a concert.

These two Japanese artists take the transformation of the stage to its limit and highlight the importance of the image in these new kinds of audiovisual work. The two-dimensional surface of the screen has become a new form of “stage” that replaces the space previously occupied by the musicians: a new vertical stage based mainly on exploring the many audiovisual correlations that technological tools now make possible in real time.

The ontology of live performance has, therefore, changed, and technology has played a central role in this transformation. “In the current economy of repetition”, Auslander says, “live performance is little more than the vestige of an old historical order of representation, a remnant from which we cannot expect much more in terms of presence and cultural influence”. Paraphrasing Benjamin, we might say that, in the age of audiovisual works produced through editing, the decline of live performance seems inevitable.

NOTES

1.- The computer is sometimes combined on stage with other instruments. In such cases, the scenographic model does not differ much from that of a traditional pop or rock concert. In fact, the computer is now a very common instrument in the keyboard and synthesizer sections of many bands, and in these cases the sounds it produces are not central to the music. This study does not focus on such “hybrid” proposals, but only on those in which the computer is used as a “solo” or main instrument.

2.- The word “acousmatic”, a key concept in Musique Concrète, originates in the teachings of the Pythagorean School. Pythagoras's pupils, known as the “akousmatikoi”, were asked to listen in total silence to the words of their master, who remained hidden behind a curtain. This arrangement was meant to spare the students any visual distraction so that they could concentrate better on his words.

3.- This “auratic loss” is different, however, from the one that occurs when a CD, mp3 or any other kind of audio recording is played. Live music, even in computer music performances, maintains the theatrical space, the physical presence of the interpreter and, ultimately, a certain idea of uniqueness associated with the live performance.

REFERENCES

Auslander, Philip. Liveness: Performance in a Mediatized Culture. Routledge, 2008.

Benjamin, Walter. La obra de arte en la época de su reproductibilidad técnica. Editorial Itaca, 2003.

Cage, John. Silencio. Árdor Ediciones, 2002.

Chion, Michel. L’Art des sons fixés ou la musique concrètement. Editions Metamkine, 1991.

Dean, Roger. Hyperimprovisation: Computer-Interactive Sound Improvisation. A-R Editions, 2003.

McLuhan, Marshall. Comprender los medios de comunicación. Las extensiones del ser humano. Ediciones Paidós, 1996.

Russolo, Luigi. El Arte de los Ruidos. Taller de Ediciones, 1998.

© Disturbis. All rights reserved. 2011